Overview

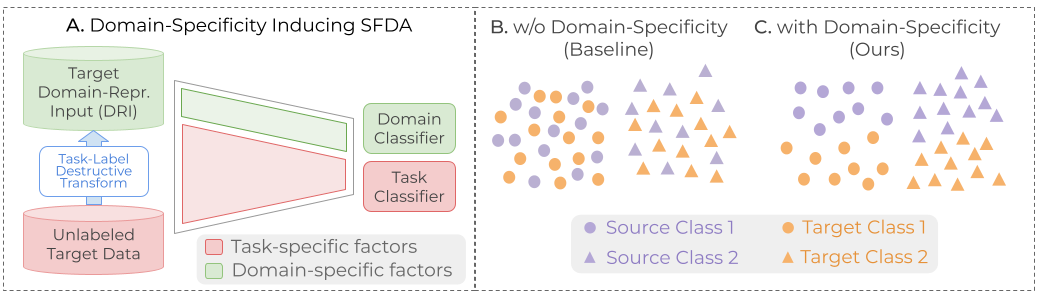

We analyze Source-Free Domain Adaptation (SFDA) from the perspective of domain specificity and show that disentangling and learning the domain-specific and task-specific factors in a Vision Transformer (ViT) architecture leads to improved target adaptation.

Abstract

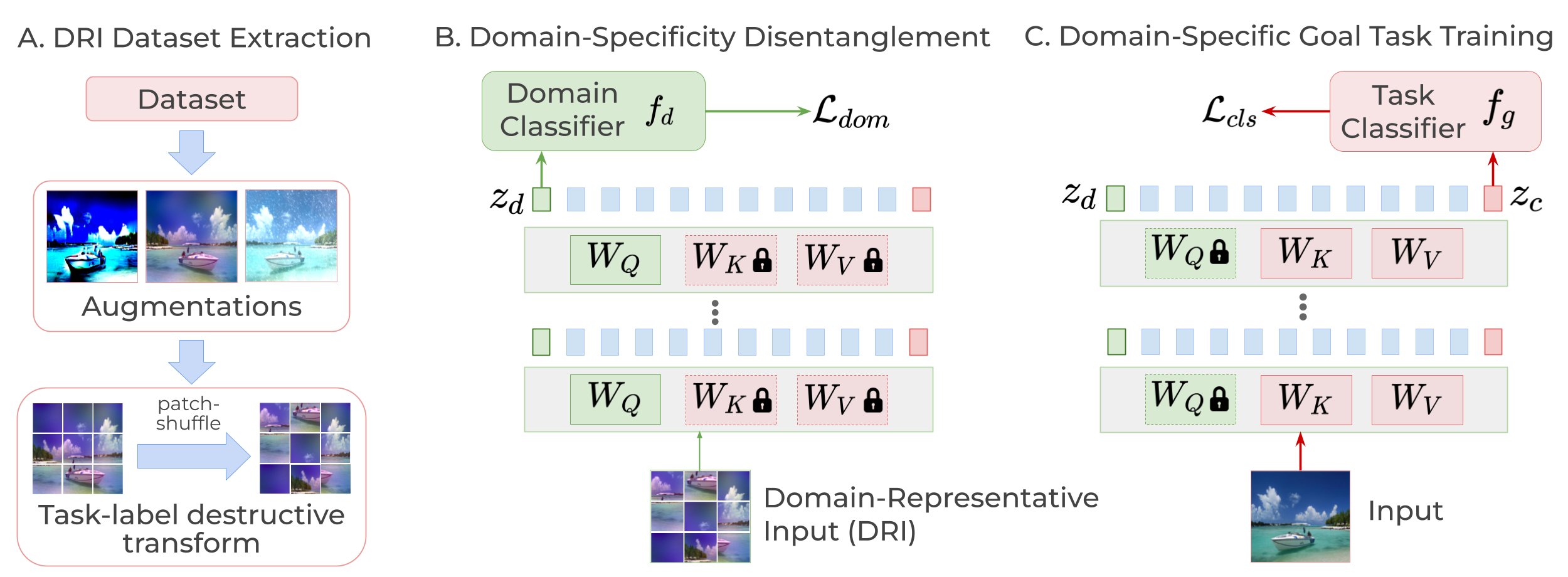

Conventional Domain Adaptation (DA) methods aim to learn domain-invariant feature representations to improve target adaptation performance. However, we argue that domain-specificity is equally important, since models trained in-domain hold crucial domain-specific properties that are beneficial for adaptation. Hence, we propose a framework that supports disentanglement and learning of domain-specific and task-specific factors in a unified model. Motivated by the success of vision transformers in several multi-modal vision problems, we find that queries can be leveraged to extract the domain-specific factors. We therefore propose a novel Domain-Specificity inducing Transformer (DSiT) framework for disentangling and learning both domain-specific and task-specific factors. To achieve disentanglement, we construct novel Domain-Representative Inputs (DRI) that carry domain-specific information and use them to train a domain classifier with a novel domain token. We are the first to utilize vision transformers for domain adaptation in a privacy-oriented source-free setting, and our approach achieves state-of-the-art performance on single-source, multi-source, and multi-target benchmarks.

Proposed Approach

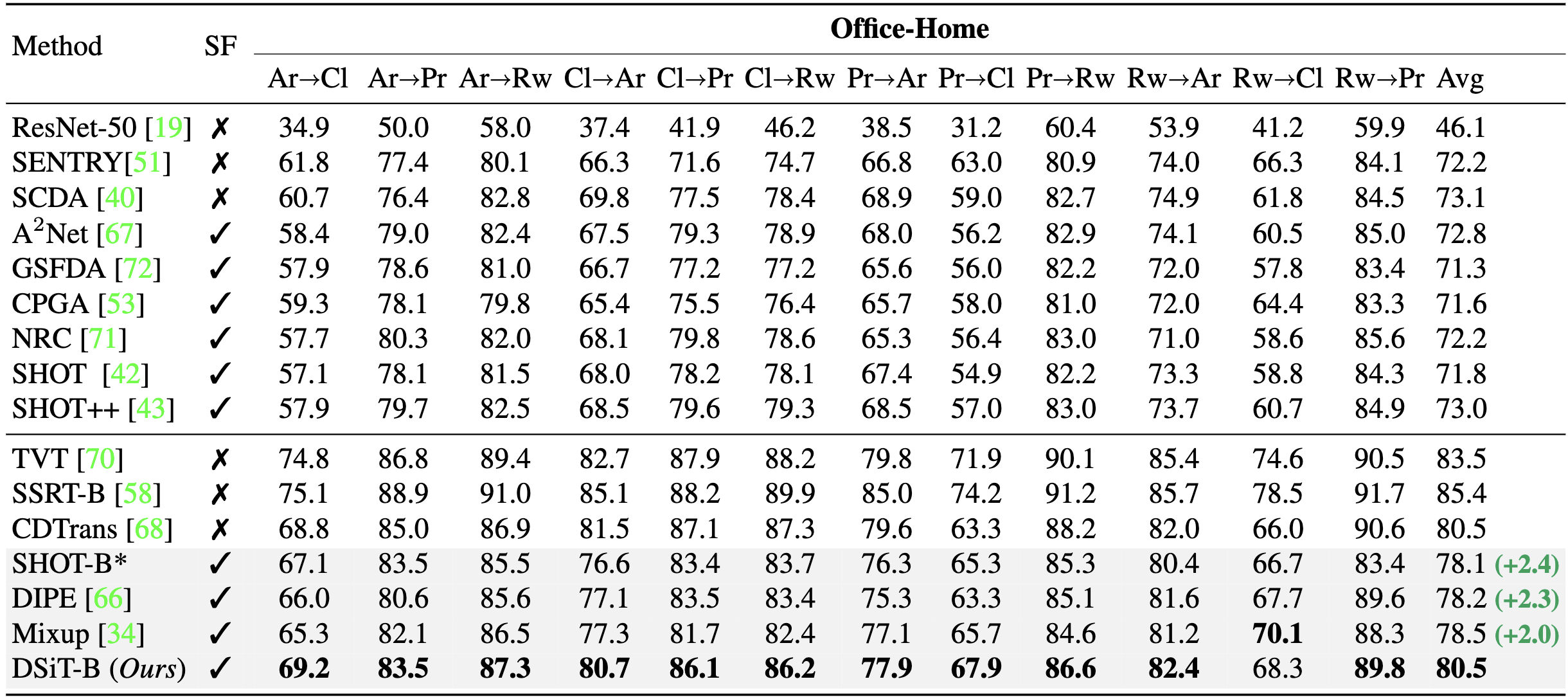

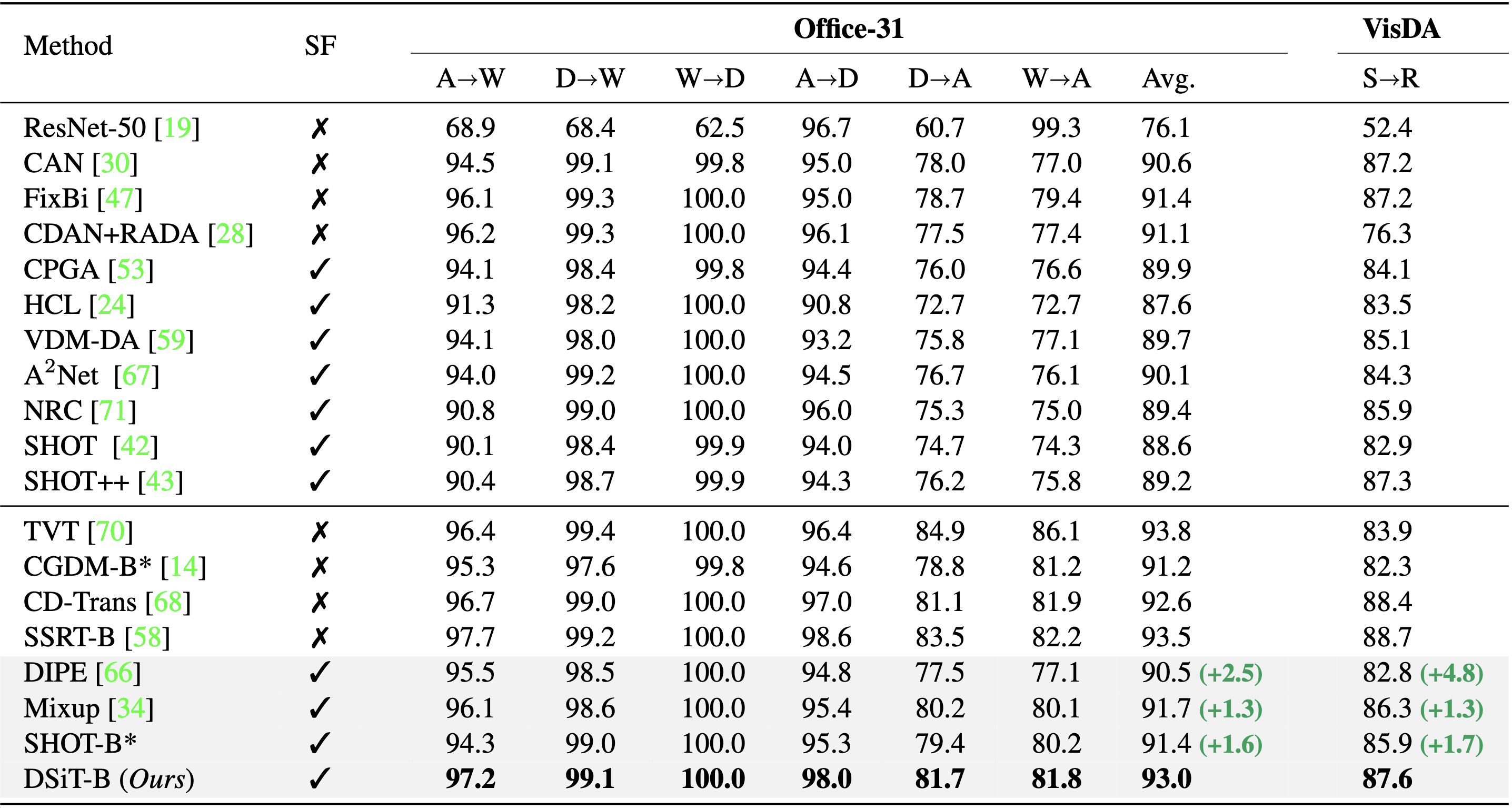
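To make the idea of a separate domain token concrete, here is a minimal PyTorch sketch of a ViT-style encoder that carries two learnable tokens, one read out by a task classifier and one by a domain classifier. This is an illustration under assumptions, not the DSiT implementation: the class name `TwoTokenViT`, all layer sizes, and the choice to read the two heads directly from the token outputs are hypothetical. It does not construct Domain-Representative Inputs and does not model how queries are used to extract domain-specific factors.

```python
import torch
import torch.nn as nn


class TwoTokenViT(nn.Module):
    """Toy ViT-style encoder with a class token and an extra domain token.

    Hypothetical illustration only; sizes and structure are assumptions,
    not taken from the DSiT paper or code.
    """

    def __init__(self, num_classes=10, num_domains=2, image_size=32,
                 patch_size=8, dim=64, depth=2, heads=4):
        super().__init__()
        num_patches = (image_size // patch_size) ** 2
        # Patch embedding: split the image into non-overlapping patches
        # and project each patch to a `dim`-dimensional vector.
        self.patch_embed = nn.Conv2d(3, dim, kernel_size=patch_size,
                                     stride=patch_size)
        # Two learnable tokens: one for the task, one for the domain.
        self.cls_token = nn.Parameter(torch.randn(1, 1, dim) * 0.02)
        self.dom_token = nn.Parameter(torch.randn(1, 1, dim) * 0.02)
        # Positional embeddings cover both tokens plus all patches.
        self.pos_embed = nn.Parameter(
            torch.randn(1, num_patches + 2, dim) * 0.02)
        layer = nn.TransformerEncoderLayer(
            d_model=dim, nhead=heads, dim_feedforward=4 * dim,
            batch_first=True)
        self.encoder = nn.TransformerEncoder(layer, num_layers=depth)
        self.task_head = nn.Linear(dim, num_classes)
        self.domain_head = nn.Linear(dim, num_domains)

    def forward(self, images):
        batch = images.shape[0]
        # (B, dim, H', W') -> (B, N, dim)
        x = self.patch_embed(images).flatten(2).transpose(1, 2)
        cls = self.cls_token.expand(batch, -1, -1)
        dom = self.dom_token.expand(batch, -1, -1)
        x = torch.cat([cls, dom, x], dim=1) + self.pos_embed
        x = self.encoder(x)
        # Position 0 feeds the task head, position 1 the domain head.
        return self.task_head(x[:, 0]), self.domain_head(x[:, 1])


model = TwoTokenViT()
model.eval()
with torch.no_grad():
    task_logits, domain_logits = model(torch.randn(4, 3, 32, 32))
print(task_logits.shape, domain_logits.shape)
```

In this sketch the two heads would be trained with separate losses, for example cross-entropy on class labels for `task_logits` and cross-entropy on domain labels for `domain_logits`. Which inputs feed each loss, and how the two objectives are scheduled, is left open here.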

Main Results

1. Single-source DA on Office-Home

2. Single-source DA on Office-31 and VisDA

Citing our work

@inproceedings{sanyal2023domain,
  title={Domain-Specificity Inducing Transformers for Source-Free Domain Adaptation},
  author={Sanyal, Sunandini and Asokan, Ashish Ramayee and Bhambri, Suvaansh and Kulkarni, Akshay and Kundu, Jogendra Nath and Babu, R Venkatesh},
  booktitle={Proceedings of the IEEE/CVF International Conference on Computer Vision},
  pages={18928--18937},
  year={2023}
}